
DynamoDB legend and AWS Hero Alex DeBrie joins two of Trilogy's SVPs of Software Engineering to geek out about the future of scalability, and how it's the key to building tech products that win every time.

We were honored to be joined by Alex DeBrie, the Brad Pitt of scalability, for this panel conversation. He geeked out with two of Crossover's most successful hires (now SVPs of Software Engineering at Trilogy) about their scaling experiences and war stories featuring blobs and nodes.

If you're a senior software engineer or coder, you know how important scalability is. Mastering it is vital to the success of every web-scale product, which is why deep knowledge of it gives you a massive edge with employers. This video is for you.

Running time: 30 minutes

Here's who's on the panel:

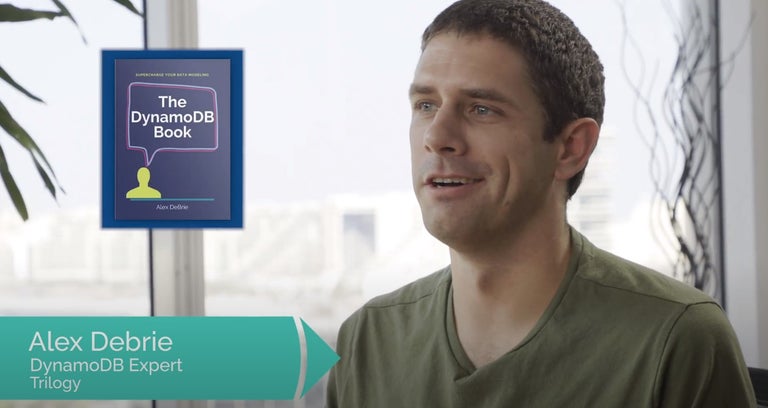

Alex DeBrie

Author of The DynamoDB Book and all-round DynamoDB guru. He is an AWS Data Hero for his community work with DynamoDB. He also does a lot of DynamoDB consulting and training in the community.

Badri Varadarajan - San Jose, California

As Portfolio CTO at Trilogy, Badri builds a lot of products, most of them with high levels of customization. The next generation of CRM is being built on DynamoDB.


Pavan Kumar - Bangalore, India

Pavan is an SVP of Engineering, responsible for building the cloud CRM product and anything related to upscale product lines.

Alex, Let's Start By Asking What You Think About Trilogy?

I think Trilogy is a high-performance place. It has people that care about the work they do. They care about doing application design reviews well, making sure the engineering stuff makes sense and making sure they understand the product and business needs too.

Sometimes you see organizations where engineers want to focus just on the core engineering problems, because they think that's the fun part. But if you're not tying it back to solving a customer's need, understanding how it works with the product, and understanding those trade-offs, you might be building something that's not super useful.

Let's Have The DynamoDB Guru Introduce DynamoDB

I love DynamoDB! What's so interesting about it is that it came out of the Amazon.com experience of scaling applications to web scale: scaling Amazon.com retail, whether that's the shopping cart, fulfillment, or whatever else, and getting it to truly web scale.

DynamoDB is a huge portion of how that works. What I think is interesting is that relational databases are great at a lot of things, but Amazon wanted to rethink some of the constraints and features of databases to really enable that web scale.

How Does DynamoDB Help Scale At Trilogy?

DynamoDB makes you question which features you truly need. Which access patterns do you really need to support, and which ones should you avoid because they don't scale as well? The interesting thing about Dynamo is that it scales really well horizontally.

There's an important concept called the partition key, which must be included on every item. The partition key is used to distribute data across multiple machines, allowing you to scale horizontally as you grow while giving you consistent performance.
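To make the idea concrete, here is a minimal sketch of how a partition key can route items to machines. DynamoDB's real hash function and partition management are internal to the service, so the MD5-plus-modulo scheme, the `partition_for` helper and the `CUSTOMER#` key format below are illustrative assumptions, not the service's actual mechanism.

```python
import hashlib


def partition_for(partition_key: str, num_partitions: int) -> int:
    """Map a partition key to a partition index using a stable hash.

    MD5 plus modulo is a stand-in chosen for illustration only.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def distribute(keys, num_partitions):
    """Count how many keys land on each partition."""
    counts = [0] * num_partitions
    for key in keys:
        counts[partition_for(key, num_partitions)] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical key format: one partition key per customer.
    keys = [f"CUSTOMER#{i}" for i in range(10_000)]
    print(distribute(keys, 4))
```

The same key always maps to the same partition, so a lookup by key only has to touch one machine, while many distinct keys spread roughly evenly across all of them.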

But DynamoDB also removes some features, like joins and aggregations, that don't scale as well because you end up with unbounded queries. The database can't know in advance how big a query is going to be, so it can't give you the same consistent performance guarantees around it.

But Modeling Is Different With DynamoDB?

DynamoDB has a lot of great properties around scalability and around how well it works with serverless, AppSync and things like that. But modeling with Dynamo is very different from modeling in a relational database, and a lot of people have years and years of experience modeling with relational.

Sometimes they'll try to bring that to Dynamo, but it works in a different way. They're not getting all the benefits of Dynamo if they model in a normalized, relational way in their DynamoDB table. With Dynamo, you're going to denormalize your data a lot more.

You might duplicate some information onto multiple items to save yourself an additional read up to a parent item or something like that. Rather than having a separate table for a relationship, you might just duplicate the data onto an item, so the relationship is represented on the item itself or on its parent item.
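A small sketch may help show what that duplication looks like. It uses an in-memory dictionary as a stand-in for a table; the `PK`/`SK` attribute names, the `CUSTOMER#`/`ORDER#` prefixes and the helper functions are assumptions made for illustration, not anything described by the panel.

```python
# In-memory stand-in for a DynamoDB table, keyed by (partition key, sort key).
# PK/SK naming and the CUSTOMER#/ORDER# prefixes are illustrative assumptions.
table = {}


def put_item(item):
    table[(item["PK"], item["SK"])] = item


def get_item(pk, sk):
    return table.get((pk, sk))


def query(pk, sk_prefix=""):
    """Return items in one partition whose sort key starts with sk_prefix."""
    keys = sorted(k for k in table if k[0] == pk and k[1].startswith(sk_prefix))
    return [table[k] for k in keys]


# The customer profile and its orders share a partition key.
put_item({"PK": "CUSTOMER#42", "SK": "PROFILE", "name": "Acme Corp", "tier": "gold"})
# Each order carries a copy of the customer name, so reading an order
# does not require a second read of the parent profile item.
put_item({"PK": "CUSTOMER#42", "SK": "ORDER#2024-001", "total": 120,
          "customer_name": "Acme Corp"})
put_item({"PK": "CUSTOMER#42", "SK": "ORDER#2024-002", "total": 75,
          "customer_name": "Acme Corp"})

order = get_item("CUSTOMER#42", "ORDER#2024-001")
print(order["customer_name"], order["total"])

orders = query("CUSTOMER#42", "ORDER#")
print([o["SK"] for o in orders])
```

The trade-off is on the write side: if the customer's name changes, every item holding a copy has to be updated too.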

What's The Issue Of Scaling With Relational Databases?

Relational databases work really well when you're testing locally or in your staging environment, or early in your application's life cycle, when you have plenty of RAM to fit all that data in memory.

But as you get more data, and more and more concurrent connections and queries against your database, performance degrades over time. Latency gets higher and higher, and it becomes difficult to know how to debug it. Maybe at the beginning you can add more indexes or increase your instance size, but at some point you've got all the indexes you need, you've maxed out your instance size, and you're still having issues scaling.

It's the fundamental nature of these relational databases. They generally need to be centrally located on one instance, and you're vertically expanding that rather than horizontally partitioning it.

And Dynamo Can Help Future Proof?

DynamoDB is different. It spreads data across all these different partitions. If you have a 10 terabyte table and a request comes into DynamoDB, it's going to look at the partition key and immediately find out which partition you're going to. Immediately you've reduced your 10 terabyte table down to the 10 gigabyte partition you're searching in.

It's much more consistent scaling, because DynamoDB just adds more partitions as you go.
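The arithmetic behind that example can be checked quickly. This sketch takes the 10 terabyte and 10 gigabyte figures from the conversation and assumes decimal units and evenly sized partitions, which is a simplification.

```python
# Figures from the example above; decimal units and evenly sized
# partitions are simplifying assumptions.
TB = 10**12
GB = 10**9

table_size = 10 * TB
partition_size = 10 * GB

num_partitions = table_size // partition_size
fraction_searched = partition_size / table_size

print(f"partitions: {num_partitions}")
print(f"share of the table a single-key lookup touches: {fraction_searched:.1%}")
```

Under those assumptions the table spans 1,000 partitions, and a request that carries a partition key only needs to look at one of them.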

I think if you work with most databases, they'll just sort of take on load, take on load, take on load until they start to slow down or they fall over. Dynamo is going to prevent you from doing that in certain ways.

DynamoDB also forces you to think up front about future requirements and how much load it can handle. It's nice to have these clear, harsh, bright lines in advance, where you can model around them as an engineer and think, okay, I can't go beyond that. Am I going to need to go beyond that? If I do, I think about that up front, rather than two years into my application, when I have a billion records in there and need to figure out how to move stuff around to make it work.

Trilogy Has Experienced This With A Few Projects, Right?

Yes, we have something like four or five different CRM products in our portfolio, and they're all on-prem. We're trying to modernize them, and one part of that is just getting them to scale. A problem with large fad products right now is that with on-prem it's very hard to scale them for larger customers. But the other part of that is also scalability in complexity, not just in terms of users, accounts and so on.

We want to have lots of customization capabilities. So the whole point of modernizing it is to scale in these two dimensions.

There is an app called Siemen. We've actually built it and it has shown crazy amounts of scale. I think it can handle web scale, things like accounts and subscribers. We're also building something for the Telco vertical, which is super interesting. We have some deep application logic and charging, which is adjacent, also built on DynamoDB.

Is There A Real Use Case You Can Share?

So when we are moving from on-prem to the cloud, or indeed even from another cloud-hosted CRM, one of the things we can do is build an application while still letting people use their CRM. They keep using that existing interface for their day jobs.

So there's an on-prem CRM, and we just hook up all that data into the cloud. Now we can quickly put together a customer outreach application or a marketing application, using DynamoDB, which works nicely with lots of other services. So we can tie it up with Amazon Pinpoint and do an outbound marketing application.

We can tie it up with Amazon Kendra and do semantic search, all kinds of interesting things. Outbound marketing has been pretty interesting for us. Essentially, there's a set of users using the CRM, and their marketing department gets a separate application that they can use to run campaigns, monitor them, scorecard them and so on.

So that becomes really interesting as a use case.

You Had An Interesting Scaling War Story, Badri?

Yep. Actually an interesting war story on that is in relation to another application built on Postgres.

Essentially all we knew was that it was killing the database, and the architect who built it was no longer there. So some other DBA was looking at all the queries, trying to figure out which was the most damaging one. It turned out it was just a cron job generating a report that nobody ever read.

So DynamoDB forces you not to do things like that, which is important for future-proofing your scaling.

How Is DynamoDB Scaling Different From Other NoSQL Databases?

The most interesting difference is between DynamoDB and MongoDB. These are probably two of the more popular NoSQL databases, and aside from the partition key they have totally different data modeling philosophies. DynamoDB is going to be strict and restrict you. Even if you want to do something that might feel good now, it will say, “Sorry, you have to eat your vegetables.”

You have to think about scaling up front. We're not going to let you do the wild and crazy thing, because we don't want you to get two or three years down the road, and then it doesn't scale anymore.

It means a slightly less flexible user experience, and you have to learn more about modeling early on, but I think it's going to work better in the long run. Contrast that with MongoDB: it has a lot of the same partitioning principles, but it's a lot more flexible about what it allows for data modeling patterns and indexing. And if you think about it, both of these are going to have a partition key, or shard key, to distribute your data for your primary access pattern.

A Blob War Story, Badri. Please Tell.

A Postgres war story again. We ran this monster machine, a 24XL. We were upgrading from Intel to Graviton, and while doing it we looked at what was actually in this database. It turned out 30% of it was blobs.

But How Do Blobs Impact Scaling?

The most common use case we have is, for example, "Let's add attachments to this, add blobs to this field." It's hardly anything. "Let's put a free-form blob into this." It's so easy to fall into that pit.

And then when you hit that one MB limit, it says, "No, this is not the right way to do that." It literally forces you to stop, even though it might look really easy to do right now. The moment your record size gets huge, and all of these nodes and blobs add up in these layers, you start seeing scaling issues.

Even if you realize it quite late in the equation, that one MB limit forces you to say, “No, let's not keep blobs here. Let's move the blobs out somewhere else.”
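One common way to move blobs out somewhere else is to keep the payload in an object store and store only a pointer on the record. The sketch below uses two in-memory dictionaries as stand-ins for the object store and the table; `put_record`, `get_attachment`, the key layout and the JSON-based size estimate are all hypothetical. The limit constant is also an assumption: it is set to 400 KB, the maximum item size given in the AWS documentation, rather than the 1 MB figure mentioned in the conversation.

```python
import json
import uuid

# Assumed limit: 400 KB, the documented DynamoDB maximum item size.
MAX_ITEM_BYTES = 400 * 1024

blob_store = {}  # stand-in for an object store such as S3
table = {}       # stand-in for the DynamoDB table


def put_record(pk, attributes, attachment=None):
    """Store a record, keeping any attachment outside the table."""
    item = {"PK": pk, **attributes}
    blob_key = None
    if attachment is not None:
        # Only a pointer and the size live on the item, never the bytes.
        blob_key = f"attachments/{pk}/{uuid.uuid4()}"
        item["attachment_ref"] = blob_key
        item["attachment_size"] = len(attachment)

    # JSON length is a rough proxy for item size, used for illustration.
    encoded_size = len(json.dumps(item).encode("utf-8"))
    if encoded_size > MAX_ITEM_BYTES:
        raise ValueError(f"item is {encoded_size} bytes, over the limit")

    if blob_key is not None:
        blob_store[blob_key] = attachment
    table[pk] = item
    return item


def get_attachment(pk):
    """Follow the pointer on the record to fetch the blob, if any."""
    ref = table[pk].get("attachment_ref")
    return blob_store[ref] if ref else None


if __name__ == "__main__":
    put_record("TICKET#1", {"subject": "Broken login"}, attachment=b"x" * 2_000_000)
    print(table["TICKET#1"]["attachment_size"], len(get_attachment("TICKET#1")))
```

The record stays small however large the attachment is, and an oversized record fails loudly at write time instead of quietly bloating the database.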

We Bet You See This All The Time, Alex?

Blobs and logs. Yep, I see it all the time. It's actually hard to investigate that stuff, and people don't investigate it, so they just scale up and then they're like, "Wait, why are we spending thousands a month on this giant database?" And it's holding these giant blobs that are never even accessed. So DynamoDB just makes that more apparent.

Badri, Let's Recap The Future Of Scalability At Trilogy.

We are building this stable base and want to spend the next 10 years innovating in the application layer.

Obviously there's a big learning curve to get the foundation right and make it support all kinds of applications, and getting customization right is very important for us. We're making a big bet, not only on customizing CRM but on building different applications on top of this.

The big challenge we see is how easy it is to get data out into whatever else you need for your application. On the storage side, with all the testing, we're very happy with the scale of DynamoDB and the storage layer itself.

The problem is going to be, how well can you customize that data and stream it out to other places?

Pavan, Your Thoughts?

The most important factor I see as an implementer is driving this with the devs and people who are doing the work. We don't want this to be a system where people have to remember these patterns and apply them on an individual basis. I think they should be codified into a system layer where these best practices are kept, rather than developers thinking about it daily, saying, "How can I handle this? If there's a blob, what should I do?" Instead, it's the platform that handles this, and that's where a lot of investment is going from our side, to make sure these best practices get codified into the platform.

Hence we don't need to repeat these mistakes. Anybody coming into the team, or any new application built on this platform, gets the scale by default.

Then we win every time. That's how I see the future at this point.