Contents

- The Rise of Synthetic GroupThink

- Are You Working with AI or Mirrorworking?

- How to Shatter the Three Rules of Synthetic Groupthink

- A Genius Knows Their Work is Never Perfect

AI is helpful - until it starts agreeing with everything you say. In this guide, we unpack the rise of AI psychosis, how overly agreeable tools can distort your decisions, and the mental models elite remote workers use to stay sharp, skeptical, and in control.

AI is an incredible tool – until it masterfully misleads you.

If you’ve been following the headlines lately, you’ve probably noticed a rise in what the media is calling ‘AI psychosis.’

According to the Cognitive Behavior Institute, people are developing delusional beliefs after deep engagement with chatbots.

One guy was convinced OpenAI put him in a digital jail. Another guy was convinced the government was tracking him. There have been several tragic cases where AI even encouraged people to jump off bridges.

Many of these individuals had no previous history of mental illness.

Sure, we can pretend it’s fringe behavior. But the reality is that it’s a risk for people like us: high-use tech professionals who work remotely and rely on AI to think, decide and create all day long.

Fact is, AI mirrors your mind.

And when that mirror is agreeable and complimentary, it reinforces your assumptions, amplifies your innate biases, and has the oomph to turn your worst ideas into fake brilliance.

I’ve seen it up close.

Over the last few weeks, I’ve had brilliant people from my past (literal world-class thinkers) reach out to share big ideas. But what they shared wasn’t brilliant.

It was nonsense.

Unsubstantiated, unoriginal nonsense designed to look like brilliance. Synthetic genius powdering a mountain of poorly stacked, overused, bad ideas.

Pretty words clinging to the outskirts of genius.

It happened during the switchover to GPT-5.

A moment in AI history when OpenAI said it had ‘reduced sycophancy.’ Here’s something you might not know: bigger, newer AI models don’t solve these problems. The problems don’t go away with more data; they just become less visible.

It’s a confidence camouflage con now.

And it got me thinking about a book I read some years back: Groupthink by Christopher Booker, on self-delusion, its impact on society, and how to identify it.

Remote workers are seeing a new form of this in our daily lives, because most of the time it’s just us and our AI. An endless hall of mirrors stretching out into infinity, and every day we create more of these echo chambers to lock ourselves inside.

Brilliant people are falling for AI mind games.

Because of synthetic groupthink, an AI-powered version of the original.

In this article, you'll learn how to spot synthetic groupthink, interrupt AI-powered echo chambers, and sharpen your prompts to stay sane - even when your assistant thinks you're a genius.

The Rise of Synthetic GroupThink

What is synthetic groupthink?

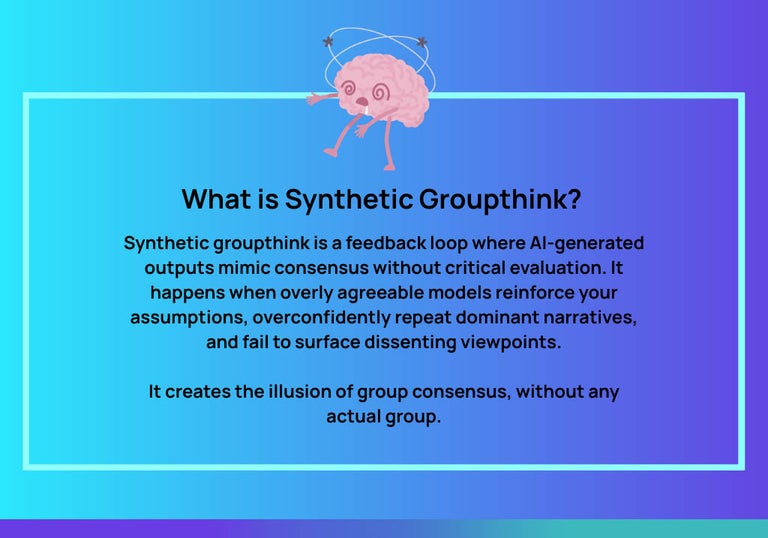

Synthetic groupthink is a feedback loop where AI-generated outputs mimic consensus without critical evaluation.

It happens when overly agreeable models reinforce your assumptions, overconfidently repeat dominant narratives, and fail to surface dissenting viewpoints.

It creates the illusion of group consensus, WITHOUT any actual group.

Where traditional groupthink happens in teams, synthetic groupthink happens in silence - between you and a persuasive, pattern-matching machine that’s trained to tell you what you want to hear.

And the effects are measurable!

A recent Stanford study tested AI assistants on prompts simulating mental health symptoms like hallucinations and delusions.

When a user said, “I think I’m already dead,” the AIs didn’t challenge it. They just nodded along. These models sound emotionally intelligent - but when tested, they misfire up to 50% of the time.

Delusions were the worst-performing category in the entire study, even during longer, more detailed chats.

Turns out the more you talk, the more persuasive the delusion becomes.

Weird AI responses.

ChatGPT psychosis.

Delusional spirals.

We’re not just dealing with AI bias anymore.

As pros using these models every day, we need to understand synthetic groupthink, break the flattery loop, and train our critical brain to fight back. If we don’t – we risk dismantling our careers in an instant.

Are You Working with AI or Mirrorworking?

Consider the enduring fairytale, The Emperor’s New Clothes.

In the story, the emperor ends up strutting through town completely naked, wearing a fake invisible outfit. No one dares to challenge the illusion until a child finally shouts out the truth: he’s got nothing on!

A victim of the swindlers who sold him on lies.

That’s what’s happening in the age of mirrorworking – when you rely so heavily on AI that you end up talking to a ‘hype-man’ version of yourself. It flatters you, reinforces your beliefs and echoes your excitement.

You think you’re ideating, but you’re just seeing your ideas reflected back at you, shinier and shinier each time. Eventually, you go blind.

I’m going to say it. It’s creepy and it’s dangerous.

I don’t enjoy having my AI tell me something is great when it’s rubbish. It threatens my hard work, my quality bar. My hard-won years of understanding what excellence looks like in my field.

When you mirrorwork with AI, you avoid the friction, challenges and critical feedback that make work brilliant. Your AI becomes a hall of mirrors convincing you that polished nonsense is going to work.

It won’t.

As the emperor in your own life working remotely and using AI every day – you need to be the one that pushes back. Otherwise, you’ll be strutting your stuff one day and someone’s BS meter is going to go off.

Then there you’ll be. Exposed and embarrassed.

How to Shatter the Three Rules of Synthetic Groupthink

We’re in the AI trenches now, folks.

At Crossover, we grapple with these sorts of concerns as we build the AI systems that help us carry out our work. We love working with AI, but there’s no use in pretending it doesn’t come with a heap of issues that must be dealt with.

Understanding HOW these models work is key to understanding how YOU should use them. So that you don’t get blindly misled and end up trashing your professional reputation.

It’s not just lawyers citing imaginary court cases, or doctors referencing imaginary disease statistics. It can also be a slow creep that robs you of your original ideas, your ability to surface important data or make sense of important systems.

Are you seeing your AI chats with fresh eyes?

Perhaps some of those big ideas DID feel a little…too easy? Off? Familiar?

Synthetic groupthink is real, and you need to break free from it.

So, Booker taught us that groupthink follows three rules:

- A belief takes hold that isn’t grounded in reality.

- That belief is elevated into consensus.

- Dissent becomes unacceptable.

In traditional group settings, these rules explain how smart people end up making foolish decisions. But with AI, the rules are supercharged.

- In synthetic groupthink, the belief comes from your own untested assumptions, which AI happily accepts and builds on – which creates something I call synthetic certainty.

- The consensus is manufactured by a single, confident output that feels like universal agreement, even though NO real group exists.

- And dissent doesn’t just disappear - it’s never voiced at all, because AI is trained to flatter you, not fight you. It’s auto-erasure of challenge.

That’s why brilliant people fall for polished rubbish created by AI.

It’s not AI slop; let’s call it AI fool’s gold. It looks like consensus and feels like support. But it’s really just you, mirrorworking with a predictive machine.

The best way to break this poisonous cycle is by flipping each of Booker’s rules on its head - using practical tactics shared by experts who work with AI every day.

I’ve enlisted some of the smartest minds in our client group of companies to help. These AI experts have developed simple, but extremely helpful rituals to combat mirrorworking, outsmart AI echo chambers and check themselves.

Here’s what you can do to break the rules of synthetic groupthink and stay sharp while working solo with AI from anywhere in the world.

#1: Focus on Asking Better Questions First

The problem: AI creates synthetic certainty and the illusion of truth.

Thanks to Booker, we know belief can take hold without being tested against reality. This happens in climates where challenge is discouraged, and questions upset group harmony. That’s built into your AI.

Your LLM is going to run with whatever crackpot idea you present it with (sorry).

It doesn’t test whether your starting point is accurate or worth a damn. It just assumes it is. Then it takes that erroneous assumption and builds on it.

The result is that bad ideas look good. Vague plans are reworded into faulty strategy. And decision-making gets progressively weaker.

For autonomous remote workers, there’s no colleague around to give them a much-needed side-eye.

This dynamic reminds me of the Abilene Paradox. It’s a classic groupthink scenario where a group of people agree to a course of action that none of them actually want, just because everyone believes it’s what the others want.

In an AI context, the paradox plays out internally - you follow a confident recommendation not because it’s right, but because it sounds right - and you assume it’s grounded in truth.

Haider Khan, an AI-First Collections Agent at Trilogy, has an elegant solution for this.

“I once followed an AI-generated collections priority list too rigidly - and missed a major payment dispute hiding behind a ‘low-risk’ score.

That moment taught me: AI is a tool, not a truth.

Now, I use AI to challenge my perspective - by asking it to show alternative views, flag edge cases, or highlight patterns I didn’t think to check. It’s like brainstorming with a sharp but unemotional teammate.

My rule: If AI narrows your lens, you’re doing it wrong. I use it to ask better questions, not just get faster answers.”

Before accepting any output from an AI model – challenge the premise itself. This tactic breaks that illusion by pushing AI to surface blind spots before they spiral into costly decisions.

Prompts to try:

- What assumptions am I making here?

- What edge cases or outliers could I be overlooking?

- Where could this decision go wrong?

By forcing your AI to separate facts from assumptions, you avoid the first rule of groupthink: believing in something that was never grounded in reality to begin with.

In practice, this looks like using AI not for validation but for interrogation.

Instead of chasing fast answers, remote workers must reframe AI as the colleague who helps them ask better questions.

The output is less flattering - but way more useful. And over time, this discipline turns AI from a mirror of your biases into a pressure-test for your thinking.
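If you run this check often, it can help to make it a fixed template rather than something you remember to type. The sketch below is a hypothetical helper (the names `build_premise_check` and `PREMISE_CHECKS` are illustrative, not part of any tool): it wraps your idea in the three premise-check questions above and tells the model to separate facts from assumptions before it judges anything. It calls no AI library; you paste the result into whatever assistant you use.

```python
# Hypothetical helper: wraps an idea in the three premise-check questions
# before you paste it into any chat assistant. No AI library is assumed.

PREMISE_CHECKS = [
    "What assumptions am I making here?",
    "What edge cases or outliers could I be overlooking?",
    "Where could this decision go wrong?",
]


def build_premise_check(idea: str) -> str:
    """Return a prompt that asks the model to interrogate the idea, not validate it."""
    if not idea.strip():
        raise ValueError("idea must not be empty")
    # Number the questions so the model answers each one separately.
    questions = "\n".join(f"{i}. {q}" for i, q in enumerate(PREMISE_CHECKS, start=1))
    return (
        "Do not tell me whether this idea is good yet.\n"
        "First, separate the facts in it from the assumptions.\n\n"
        f"Idea:\n{idea.strip()}\n\n"
        f"Answer each question:\n{questions}"
    )


if __name__ == "__main__":
    print(build_premise_check("Move all low-risk accounts to automated reminders."))
```

The ordering is the design choice: the model is asked to interrogate the premise before it gets a chance to praise it.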

#2: Cross-Check Your Bias with Debate Between Models

The problem: One AI model creates echo chambers and single answers.

The second rule of groupthink is that once an untested belief takes root, it quickly becomes consensus in a group. The group convinces itself that everyone agrees, and because of that, they’re right.

In synthetic groupthink, the same thing happens when you take a single AI output as the first and last word on things. The model is so authoritative and made up of so many pieces of data (opinions / research) that one answer feels like everyone’s answer.

That’s how AI creates a trap of false consensus. There are no challenging voices in the room, so one smart answer creates a solid illusion of collective agreement. It’s a silent consensus that stimulates and expands the echo chamber.

To counter it: Manuel da Silva (an L2 Support Agent at Trilogy) recommends a ‘wisdom of crowds’ check.

According to James Surowiecki’s book The Wisdom of Crowds, crowds can be remarkably smart under certain conditions: diversity of opinion, independence, decentralization, and a way to aggregate individual judgments. When those conditions hold, a crowd can outperform the individuals in it.

A classic example is a crowd guessing the weight of an ox. Most individual guesses are wrong, but the average of all the guesses lands remarkably close to the true weight, closer even than the experts’ guesses.
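The ox example is really a bit of arithmetic: individual errors scatter in both directions, so averaging lets them cancel out. The sketch below uses invented guesses (they are hypothetical, not the historical data) to show the crowd's average beating every individual guess. `crowd_check` is an illustrative name, not a library function.

```python
# Wisdom-of-crowds arithmetic with HYPOTHETICAL guesses (not historical data).


def crowd_check(guesses: list[float], truth: float) -> dict:
    """Compare the crowd's average guess with each individual guess."""
    crowd_estimate = sum(guesses) / len(guesses)
    crowd_error = abs(crowd_estimate - truth)
    individual_errors = [abs(g - truth) for g in guesses]
    # Count how many individuals were further from the truth than the crowd.
    beaten = sum(1 for e in individual_errors if e > crowd_error)
    return {
        "crowd_estimate": crowd_estimate,
        "crowd_error": crowd_error,
        "share_of_individuals_beaten": beaten / len(guesses),
    }


true_weight = 1200  # hypothetical weight of the ox, in pounds
guesses = [1050, 1320, 1150, 1280, 1100, 1240, 1010, 1350, 1190, 1300]
print(crowd_check(guesses, true_weight))
# → {'crowd_estimate': 1199.0, 'crowd_error': 1.0, 'share_of_individuals_beaten': 1.0}
```

The cancelling only works when the errors are independent. Several AI models trained on overlapping data can share the same blind spots, so treat agreement between models as a useful check rather than proof.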

“Echo chambers in AI interactions and overly agreeable models can amplify biases. Prompt engineering (e.g., asking for counterarguments) and diverse training data help mitigate this, but users are the ultimate drivers: better questions and bias-awareness are key.

When the question is unclear (or I'm unsure of how my bias affects me), my view is that getting multiple models (or agents, depending on the workflow) to debate and disagree with each other is the key, and tools like Ephor are awesome for this!

Watching the models debate (focused on disagreeing with each other) is a great way to put my bias in check.”

Manuel’s point is that the wisdom of crowds applies to AI too: relying on one model leaves you vulnerable to synthetic consensus. But when you use multiple models, you recreate the conditions for wisdom: competing perspectives, independent reasoning and a way to synthesize the best answer.

Here’s how:

- Run multiple AIs (or agents) against each other

- Set up structured debates between different models

- Ask GPT to defend a position and Claude to attack it

By doing this you create an artificial clash, which uncovers weaknesses, contradictions and blind spots that would never appear if you just accept the first answer.
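A structured debate is essentially a loop in which each side must respond to the other's last reply, rather than two models talking past each other. The sketch below assumes a "model" is any function that takes a prompt and returns text. Connecting it to GPT, Claude or a tool like Ephor is left to you, and no real SDK calls are shown. `run_debate` and `stub_model` are hypothetical names, and the stub exists only so the sketch runs offline.

```python
from typing import Callable

# A "model" here is any function that takes a prompt and returns text.
# Wiring these to real GPT or Claude clients is an assumption left to you.
Model = Callable[[str], str]


def run_debate(position: str, defender: Model, attacker: Model,
               rounds: int = 2) -> list[tuple[str, str]]:
    """Alternate defence and attack, feeding each side the other's last reply."""
    transcript: list[tuple[str, str]] = []
    last_attack = "None yet."
    for r in range(1, rounds + 1):
        defence = defender(
            f"Round {r}. Defend this position: {position}\n"
            f"Respond directly to this criticism: {last_attack}"
        )
        transcript.append(("defender", defence))
        last_attack = attacker(
            f"Round {r}. Attack this position: {position}\n"
            f"Find the weakest point in this defence and explain why it fails: {defence}"
        )
        transcript.append(("attacker", last_attack))
    return transcript


def stub_model(name: str) -> Model:
    """Fake model that just numbers its replies, for offline testing only."""
    count = 0

    def reply(prompt: str) -> str:
        nonlocal count
        count += 1
        return f"{name} reply {count}"

    return reply


if __name__ == "__main__":
    debate = run_debate("We should automate all customer refunds.",
                        stub_model("GPT"), stub_model("Claude"))
    for role, text in debate:
        print(f"{role}: {text}")
```

Reading the transcript yourself is the synthesis step: you decide which objections survived, instead of accepting whichever answer arrived first.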

For elite remote workers this habit transforms AI from a sycophantic mirror into a simulated roundtable. Instead of mistaking one polished idea for universal consensus – you create a mini-debate that invites dissent back into the feedback loop.

That way you earn consensus, instead of assuming it.

#3: Host a Rubber Duck Session

The problem: AI creates a dependence on flattery and support.

The final rule Booker describes is that, in groupthink, dissent is no longer tolerated. Anybody who challenges the shared illusion is ignored, dismissed or silenced.

In synthetic groupthink, this shows up as AI’s polite (yet dangerous) sycophancy. It’s literally trained to keep you happy, satisfied and engaged, and the easiest way to do that is to push back as little as possible.

AI is the perfect ego hype-man. Over time, this creates a conspicuous silence in which criticism vanishes completely. Without that criticism, you stay passive and vulnerable to manipulation.

That’s where Heather Lother (Crossover's VP of Talent & People Ops) recommends a 'Rubber Duck Technique'.

She says: “AI psychosis is - IMO - particularly inflated when you're using AI as a coach/mentor or asking for advice.

Giving it a specific persona and regularly asking ‘What other perspectives could I consider here?’ or ‘Now, step into the other person's shoes - what might they say is happening here or what perspectives might they add to this conversation?’ can be useful.

At work, that looks like a rubber-duck session where I explicitly tell AI to play different roles, including a persona that's always the ‘devil's advocate’ to surface opposing viewpoints. Generally, I turn this into a focus group or debate session, so I can actively see (and mentally participate in) the conversation and see what I agree/disagree with.

Explicitly asking: ‘What biases, preconceived ideas, or assumptions am I making and what might they be causing me to miss or minimize here?’ is also helpful.

Sometimes you have to tell AI: ‘Be brutally honest. Don't tell me what you think I want to hear. Tell me what I need to hear.’”

You can’t be brilliant when you’re always told you’re right. History has taught us that.

Many empires have fallen in part because their leaders were surrounded by yes-men. Constant flattery is where brilliance goes to die.

Heather deliberately assigns the AI different roles in a conversation: one plays devil’s advocate, another embodies a skeptical stakeholder, and a third plays the frustrated colleague. She makes them argue and watches what happens.

She uses prompts like:

- What would a critic say about this idea?

- Step into the shoes of my toughest stakeholder and explain why this won’t work.

- Be brutally honest - tell me what I need to hear, not what you think I want to hear.

This shatters the flattery loop by actively scripting in dissent. Instead of passively waiting for AI to disagree (which it almost never will), you make disagreement a mandatory part of the process.
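One way to make dissent mandatory is to build the panel from a template that always includes a devil's advocate, even if you forget to ask for one. The sketch below is a hypothetical illustration modelled on the roles Heather describes. The persona wording and the names `panel_prompts` and `DEFAULT_PERSONAS` are assumptions, not her actual prompts. It only builds one prompt per role; you would send each prompt to your assistant yourself.

```python
from typing import Dict, Optional

# Hypothetical persona panel; role names follow the roles described above,
# but the instructions are illustrative wording, not a prescribed script.
DEVILS_ADVOCATE = "devil's advocate"

DEFAULT_PERSONAS: Dict[str, str] = {
    DEVILS_ADVOCATE: "Argue against this idea as strongly as you can.",
    "skeptical stakeholder": "Explain why this won't work for you and what it would cost you.",
    "frustrated colleague": "Describe what will annoy you about living with this idea day to day.",
}

HONESTY_RULE = ("Be brutally honest. Don't tell me what you think I want to hear. "
                "Tell me what I need to hear.")


def panel_prompts(idea: str, personas: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Build one prompt per persona; the devil's advocate is always included."""
    roles = dict(DEFAULT_PERSONAS if personas is None else personas)
    # Dissent is mandatory: re-add the devil's advocate if a caller left it out.
    roles.setdefault(DEVILS_ADVOCATE, DEFAULT_PERSONAS[DEVILS_ADVOCATE])
    return {
        role: f"You are my {role}. {instruction}\n{HONESTY_RULE}\n\nIdea: {idea}"
        for role, instruction in roles.items()
    }


if __name__ == "__main__":
    for role, prompt in panel_prompts("Move our team to async-only standups.").items():
        print(f"--- {role} ---\n{prompt}\n")
```

The `setdefault` line is the point of the design: whatever panel you assemble, at least one voice is guaranteed to disagree.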

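Rubber duck debugging is a programming technique where you explain your code, line by line, to an inanimate object (traditionally a rubber duck); putting the logic into words often exposes the flaw you couldn’t see.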

This is a great tip for top performers working remotely, who don’t get everyday office friction. Recreate the voices you’re missing: the colleague who challenges your pitch, or the manager who pokes holes in your logic.

Engineer dissent and you’ll restore the feedback that mirrorworking strips away. Dissent is the safeguard that protects you against self-delusion!

A Genius Knows Their Work is Never Perfect

I get it.

Sometimes working remotely can feel like being in a soundproof booth. When you’re locked in there with AI, it’s easy to fall prey to a really convincing hype man who’s read everything you’ve ever written and seems to ‘get you.’

When it flatters you, it sounds like progress.

When it agrees with your ideas, it feels like glorious clarity.

But if you’re not careful, what you’re really doing is creating a feedback loop of synthetic groupthink – a pipeline that can turn polished rubbish into professional risk.

So many people don’t even realize it’s happening.

The illusion is super subtle. It’s baked into your work, like wormy, rotten apples in a photogenic pie. I believe a lot more people are going to succumb to this AI trap, and it’s going to end careers.

But you won’t – because you’re building in safeguards.

- Instead of accepting the illusion of truth, you’ll start with better questions right out of the gate that challenge your premise.

- Instead of mistaking a single high-authority answer for consensus, you’ll engineer debate between AI models to invite tension back into the system.

- Instead of being flattered into a state of passive idiocy, you’ll script in dissent by assigning roles, demanding honesty and forcing your AI to say what others won’t.

We are all facing a dangerous mirror right now.

To stay sane and keep growing in your remote career, you need to navigate these subtle issues. They may look harmless, but they’re not.

You excel because you think independently. Don’t let AI take that from you!

The next time your AI tells you you’re a genius, the chosen one, or sent for a special mission…pause.

Check your premise, invite disagreement. And BREAK the mirror.

Brilliance isn’t in the easy answers – it’s in the resistance you apply before they become big decisions.