Contents

- Skill #1: Agentic Judgment

- Skill #2: AI Operational Discipline

- Skill #3: AI Governance by Design

- AI Engineering and Technical Management Skills for 2026

AI engineering and technical management are changing - and many leaders aren't prepared for what's coming. As AI gains autonomy across your technical operations, you're facing management complexity that didn't exist a couple of years (or even months) ago. From agentic judgment to governance by design, this article breaks down 3 critical leadership skills that separate the leaders scaling AI from those struggling to contain it.

Your AI engineering and technical management skills will make or break effective leadership in 2026.

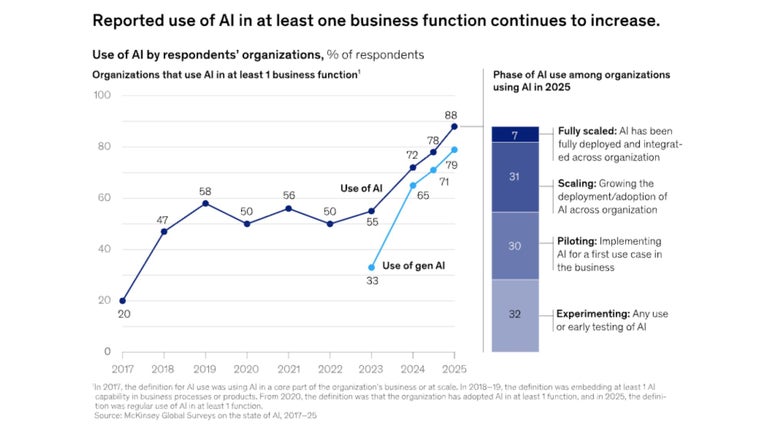

Organisations continue to report rising AI use: the share using AI in at least one business function grew from 78% in 2024 to 88% in 2025. Within development circles, the shift is just as visible, with 84% of developers now using or planning to use AI tools in their workflows.

There's no denying that AI has arrived. But it’s brought with it an important question: What skills do great leaders need to guide their teams into the AI age?

Leaders already understand the scale of AI’s impact on productivity, speed, and innovation. But many still lack the skills needed to translate that potential into real, durable outcomes.

In 2026, your leadership advantage will hinge on how well you manage autonomous agents, how effectively you operationalize AI at scale, and how intentionally you embed governance into the systems themselves.

The year's underway, and it's time to get up to speed.

Worried you don’t have the AI engineering and technical management skills to lead your team members into the AI age? We’re spilling the tea on 3 essential AI skills every leader needs to build for 2026 and beyond.

Skill #1: Agentic Judgment

AI agents are not yet mainstream: 52% of developers either don't use them or stick with simpler tools. But with a majority of developers (52%) agreeing that AI boosts productivity, the transition from manual chat toward autonomous agentic systems - systems able to plan, decide, and act to achieve goals - will happen.

But that new autonomy comes with fresh challenges.

As AI systems gain autonomy, leaders must develop the skill of agentic judgment: the mix of technical know-how and strategic thinking needed to decide when autonomy is a good fit, to set how much autonomy an AI system should have at any given moment, and to continuously reassess whether a workflow still matches its constraints and delivers on its goals.

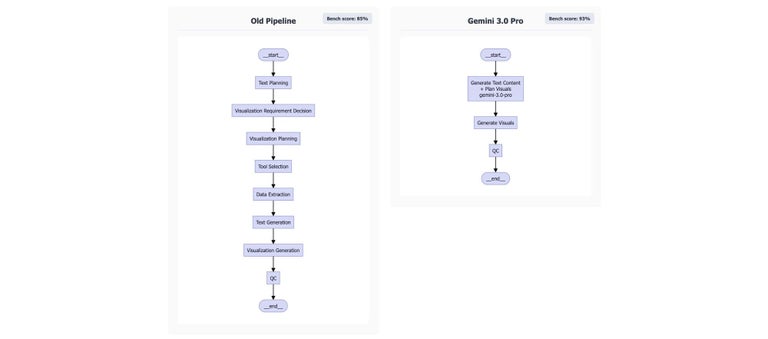

I'm reminded of a post by Praveen Koka - Trilogy's VP of AI - from late 2025. In the post, Praveen described a content-generation system he was working on at the time. The system had grown into an eight-stage agentic workflow to compensate for the limitations of earlier models like Claude Sonnet 4.5, GPT-5, and Gemini 2.5.

After two weeks of tinkering and incremental tuning, the system had inched from an 81% to an 85% success rate. At that point, Praveen stepped back and asked a question that (dare I say) most would have missed: was the complexity and orchestration underpinning the system still justified?

He made the call to collapse six stages into a single call using Gemini 3.0 and test what happened. And the result was BIG:

“I’m not joking - my pipeline went from 85–93%, all with a dumb prompt. That was so remarkable that I actually sanity checked a large sample of the output, and it actually fit the quality bar.”

This is agentic judgment in action.

Praveen understood why the original system existed, recognized when its assumptions no longer held, and had the discipline to question the orchestration itself. Instead of defaulting to complex solutions to complex problems, he reassessed autonomy, tested a simpler path, and validated the outcome against a real quality bar.
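The kind of check described here, running the complex and the simple variant over the same sample and scoring both against one quality bar, can be sketched in a few lines of Python. This is a minimal illustration rather than Praveen's actual harness: `success_rate`, `compare_pipelines`, `meets_quality_bar`, and the `min_gain` threshold are all hypothetical names introduced for the example.

```python
from typing import Callable, Sequence

Pipeline = Callable[[str], str]
QualityCheck = Callable[[str, str], bool]


def success_rate(
    pipeline: Pipeline,
    inputs: Sequence[str],
    meets_quality_bar: QualityCheck,
) -> float:
    """Fraction of inputs whose output passes the quality check."""
    if not inputs:
        raise ValueError("need at least one input to evaluate")
    passed = sum(1 for item in inputs if meets_quality_bar(item, pipeline(item)))
    return passed / len(inputs)


def compare_pipelines(
    complex_pipeline: Pipeline,
    simple_pipeline: Pipeline,
    inputs: Sequence[str],
    meets_quality_bar: QualityCheck,
    min_gain: float = 0.0,
) -> dict:
    """Score both variants on the same inputs and recommend one.

    Assumption for this sketch: the simpler system wins unless the
    complex one beats it by more than `min_gain`.
    """
    complex_rate = success_rate(complex_pipeline, inputs, meets_quality_bar)
    simple_rate = success_rate(simple_pipeline, inputs, meets_quality_bar)
    keep_complex = (complex_rate - simple_rate) > min_gain
    return {
        "complex_rate": complex_rate,
        "simple_rate": simple_rate,
        "recommendation": "complex" if keep_complex else "simple",
    }
```

The design choice worth noting is the default: the orchestration has to earn its place. If the extra stages don't clear the gain threshold on a shared sample, the simpler path is the recommendation.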

Technical Know-How + Strategic Thinking

Agentic AI will introduce a new class of leadership decisions - not about prompts or tools, but about how much structure, autonomy, and oversight a system truly needs. The leaders who’ll succeed will be those who can effectively calibrate autonomy when models, constraints, and business goals change.

Skill #2: AI Operational Discipline

Many AI initiatives fail because they were never operationalized with the same rigor as other critical infrastructure. And as AI systems move from experimentation into full-scale operation, some of the biggest operational risks leaders face are reliability, cost, and latency.

- AI Hallucination – 78% of AI research labs now rank reducing hallucinations among their top three priorities.

- Unclear Costs – Companies projected a 36% increase in monthly AI spend, but only 51% say they can confidently measure what they’re getting back.

- Speed Matters – Humans can perceive delays as short as 100 milliseconds, so anything slower is noticeable.

In 2026, successful AI will be treated like infrastructure. And that will require good leaders to develop AI operational discipline - the ability to understand, at a leadership level, how AI systems behave across the organization throughout their lifecycle.

This is all about knowing where systems break and where costs compound.

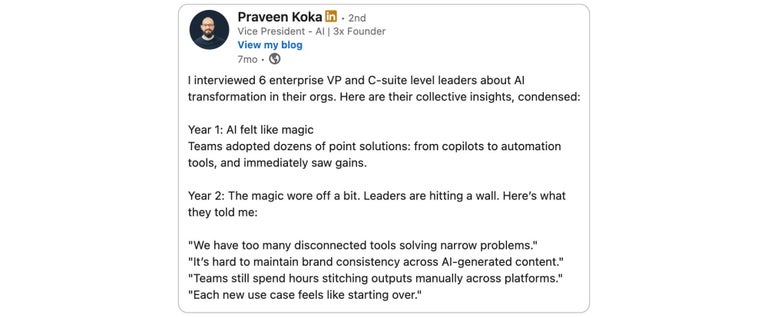

In another of Praveen's LinkedIn posts - written after a set of interviews with enterprise VPs and C-suite leaders navigating AI adoption - this is the exact challenge that surfaced.

The pattern was consistent: early wins gave way to frustration as systems scaled.

Leaders with AI operational discipline look beyond novelty or theoretical accuracy and optimize for outcomes, reliability, and sustainable cost. They know that every extra call, retry, or agent introduces latency and spend - and that unmanaged AI costs eventually surface as board-level concerns.
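To make the compounding concrete, here is a rough back-of-the-envelope model of how stages and retries add up. It is a sketch under stated assumptions, not a billing tool: the `Stage` fields, the prices, and the latencies are hypothetical, stages are assumed to run one after another, and each attempt is assumed to fail independently with probability `retry_rate`, so the expected number of attempts is 1 / (1 - retry_rate).

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Stage:
    name: str
    cost_per_call_usd: float  # assumed average cost of one model call
    latency_s: float          # assumed average latency of one call
    retry_rate: float = 0.0   # assumed probability an attempt fails and is retried


def expected_calls(stage: Stage) -> float:
    """Expected attempts per request when failures are independent."""
    if not 0.0 <= stage.retry_rate < 1.0:
        raise ValueError("retry_rate must be in [0, 1)")
    return 1.0 / (1.0 - stage.retry_rate)


def estimate(stages: Sequence[Stage], requests_per_month: int) -> dict:
    """Expected per-request cost and latency, plus monthly spend."""
    cost = sum(s.cost_per_call_usd * expected_calls(s) for s in stages)
    # Stages are assumed sequential, so their latencies add up.
    latency = sum(s.latency_s * expected_calls(s) for s in stages)
    return {
        "cost_per_request_usd": cost,
        "latency_per_request_s": latency,
        "monthly_cost_usd": cost * requests_per_month,
    }
```

Feeding the same request volume through a two-stage and an eight-stage configuration shows quickly which stages pay for themselves and which only add seconds and spend.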

This year, leaders who treat AI as a side experiment will be forced to reckon with it as infrastructure. But those who build operational discipline early will be the ones able to scale it safely and profitably.

Skill #3: AI Governance by Design

Governance policies, principles, and checklists are essential, but they're fast becoming table stakes. As AI systems gain more autonomy, governance has to be embedded directly into the system itself.

Responsible AI is not optional or theoretical.

AI governance by design covers the ability to ensure AI systems are transparent, auditable, and secure by how they are built, not how they are described. For leaders, this is about designing systems that reduce risk while still enabling speed, efficiency, effectiveness, and scale.

At a practical level, AI governance by design means leaders must:

- Define where AI is allowed to act autonomously and where it must defer to humans

- Encode risk classification and escalation paths into workflows

- Build trust by ensuring every automated decision has a clear owner and audit trail

- Design systems that can slow down, stop, or escalate when risk increases

I recently read a strong example of this in an article by technologist David Proctor posted to the Trilogy AI Center Of Excellence substack. Rather than treating governance as a bureaucratic bottleneck, Proctor reframes it as something that should live directly inside the delivery pipeline.

In his model, AI is used to classify changes by risk, generate structured change briefs, and automate approvals for lower-risk work. Higher-risk changes are automatically escalated for human review, complete with AI-generated context covering blast radius, rollback plans, and go/no-go criteria.
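A pipeline gate along these lines can be sketched as follows. This is an illustrative outline, not Proctor's implementation: the `Change` fields, the rule thresholds, and the decision to escalate everything above low risk are assumptions made for the example, and a real system might use a model rather than fixed rules to classify changes.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Change:
    change_id: str
    owner: str                      # every change has a named, accountable owner
    touches_production_data: bool
    files_changed: int
    has_rollback_plan: bool


@dataclass(frozen=True)
class Decision:
    change_id: str
    owner: str
    risk: Risk
    action: str
    reasons: list[str]
    decided_at: str


def classify(change: Change) -> tuple[Risk, list[str]]:
    """Hypothetical rules; checks run from least to most severe so the highest risk wins."""
    risk, reasons = Risk.LOW, []
    if change.files_changed > 20:
        risk = Risk.MEDIUM
        reasons.append("large change set")
    if not change.has_rollback_plan:
        risk = Risk.MEDIUM
        reasons.append("no rollback plan")
    if change.touches_production_data:
        risk = Risk.HIGH
        reasons.append("touches production data")
    return risk, reasons


def route(change: Change, audit_log: list[Decision]) -> Decision:
    """Auto-approve low-risk changes, escalate the rest, and record every decision."""
    risk, reasons = classify(change)
    action = "auto_approve" if risk is Risk.LOW else "escalate_to_human"
    decision = Decision(
        change_id=change.change_id,
        owner=change.owner,
        risk=risk,
        action=action,
        reasons=reasons,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )
    audit_log.append(decision)  # the audit trail covers approvals and escalations alike
    return decision
```

Because the routing rule and the audit record live in the same function, no change can be approved without leaving a trace of who owns it and why it was classified the way it was.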

Crucially, the system Proctor describes does not remove human accountability. It makes governance faster by making it more explicit.

As he puts it, the goal isn’t to eliminate oversight, but to move it:

“...out of the calendar and into the pipeline, with AI doing the boring parts.”

Leaders applying governance by design don't manage risk through policies alone. They encode that management into their organization's workflow - allowing AI to accelerate execution where it’s safe, and leaning on expert judgment where it’s not.

In an era of autonomous systems and rising regulation, governance is the mechanism that makes AI possible, and governance by design is what makes it scalable.

AI Engineering and Technical Management Skills for 2026

AI is fundamentally changing the way we work. And it’s something leaders need to think very carefully about.

But there’s no shortcut.

Effective leaders will need to invest time, build new instincts, and rethink how technology, people, and process fit together. But those developing leadership skills like agentic judgment, operational discipline, and governance by design will shape how their organisations evolve - allowing them to move faster, scale more confidently, and deliver better products and services than ever before.

As AI outpaces traditional cycles, your real leadership advantage lives and dies on the skills that allow you to think one move ahead. Time to start building.

Do you have the skills to lead in 2026? If so, our partners are on the lookout for pros like you!