Contents

- The Rise of the Cheatbot

- How Serious Schools Respond

- ChatGPT Cheating: Tough Reality vs. Rosy Possibility

ChatGPT cheating is the education crisis everyone's talking about - and most schools are woefully unprepared to respond. In this article, we're pulling back the curtain to reveal the inevitable rise of the 'cheatbot', and how a handful of elite schools are adjusting to thwart the AI cheating challenge. From AI bans to next-gen assessments, this is how AI moves from unavoidable cheat machine to enhanced educator.

ChatGPT cheating is the front-of-mind challenge schools everywhere are facing right now.

After more than a century of dusty chalkboards and lecture-style lessons, schools are finally staring down a classroom update that genuinely has the potential to change the face of education. An update that's set to make learning more personalized, teaching more manageable, and bottom-line outcomes significantly more meaningful for students' lives.

In a recent post to her Substack, MacKenzie Price - Co-Founder of Alpha School - shared her perspective on just how important this moment is for the industry.

But with great power comes great responsibility.

AI has already shown itself to be a world-altering force that IS changing how we teach our kids. And it's a level of change that has every future-facing school worth its salt asking the same question: how do we embrace AI without breaking education in the process?

The choices we make today will decide whether we replace the outdated classroom with something radically better, or hand kids a ticking time bomb that leaves them less prepared than ever.

Worried about rising ChatGPT cheating cases? We’re spilling the tea on the cheatbot apocalypse - and how smart AI-amplified schools are avoiding it.

The Rise of the Cheatbot

There’s a world of difference between general-purpose AI chatbots that simply generate answers, and purpose-built AI tools designed to enhance learning. Problem is, most people don’t know that difference exists.

Most run-of-the-mill LLMs that students (and teachers) have access to - think ChatGPT, Gemini, Claude, Grok - were never built with pedagogy in mind. These are machines optimized to produce answers, not build understanding.

Heck, even ChatGPT’s new custom-built Study and Learn Mode - a mode explicitly designed for, you guessed it, studying and learning - is more an example of fancy footwork on the backend (instruction tuning and system-prompt steering) than any kind of fundamental update in what the tool is actually for.

This may seem harmless at first glance, but that answer-first design has two major impacts when applied in the classroom:

- It removes challenge from inquiry (direct question → answer)

- It creates an illusion of work done (“I worked on this with the LLM…”)

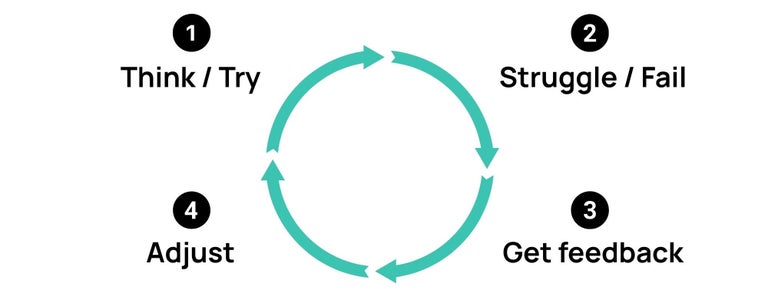

Put those together and you get a mouth-watering shortcut that feels like efficient work, but actively undermines the all-essential learning loop.

Scary truth: When a tool can mimic understanding, students can unwittingly submit ‘competence’ without building it.

And that's the path many students are choosing.

- AI Is in Use - 86% of students now use AI for school or personal purposes

- AI Is Influencing Work - 16% of students use AI for essay writing, while 21% admit to using it to help them pass exams

- Permission Is Secondary - 46% of students say they’ve used AI for schoolwork without their teacher’s approval

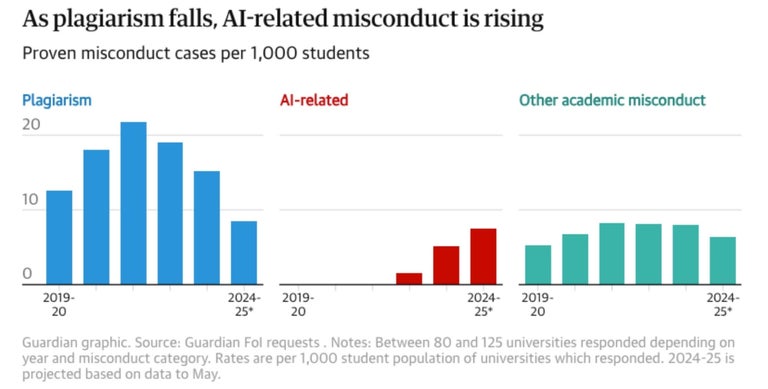

And we're not talking small, one-off infractions. A recent academic integrity survey of the 2023-24 school year uncovered nearly 7,000 confirmed cases of AI-related cheating. That works out to 5.1 cases per 1,000 students - more than 3X the 1.6 per 1,000 students recorded just one year earlier.
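For anyone who wants to sanity-check those numbers, here is a minimal sketch of the arithmetic using only the figures quoted above. The variable names are ours, and the implied student population is a rough back-of-the-envelope estimate, not a number reported by the survey.

```python
# Figures quoted in the article; "nearly 7,000" is treated as an upper bound.
confirmed_cases = 7_000
rate_2023_24 = 5.1  # confirmed cases per 1,000 students
rate_2022_23 = 1.6  # confirmed cases per 1,000 students, one year earlier

# Year-over-year growth in the per-1,000 rate.
growth_factor = rate_2023_24 / rate_2022_23

# Rough student population implied by the case count and the rate.
# This is an estimate derived here, not a figure from the survey itself.
implied_students = confirmed_cases / rate_2023_24 * 1_000

print(f"Growth factor: {growth_factor:.2f}x")
print(f"Implied student population: about {implied_students / 1e6:.2f} million")
```

Run as written, this prints a growth factor of roughly 3.19x, which is where the "more than 3X" comes from, and an implied population of about 1.37 million students.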

Students are using AI to cheat. It's time to decide what comes next.

How Serious Schools Respond

When AI cheating enters the conversation, most people immediately reach for detection tools. Unfortunately, that instinct leads straight down the wrong path.

AI detection fails for a few simple reasons: the technology evolves too fast, assessments focus too heavily on final output, and, quite frankly, modern AI produces work that's just too convincing. Add overloaded teacher schedules and poor AI training into the mix, and the entire detection-based approach collapses under its own weight.

We’ve already gone deep on this elsewhere (check out our article Why Spotting an AI Cheat Is Harder Than You Think), so we won’t rehash it here. But it’s important to call out the dead end before moving forward.

The simple truth is that the schools getting AI right aren’t trying to catch cheaters. They’re changing the system so cheating becomes the harder option.

And that update typically comes down to four core actions.

1. Banning ChatGPT (and Looking Beyond)

We've said it once and we'll say it again... the AI tools most students default to were never designed for learning. So, elite AI-amplified schools BAN THEM.

But don’t make the mistake of thinking banning mainstream LLMs means banning AI altogether.

Serious schools follow these bans with purpose-built AI tutors. Powerful tools designed to adapt to learners, adjust pacing, and personalize instruction in real time.

Alpha is the gold standard here. Using adaptive tools like AlphaRead and AlphaWrite, students receive AI-powered, personalized learning plans that dramatically accelerate literacy and language development.

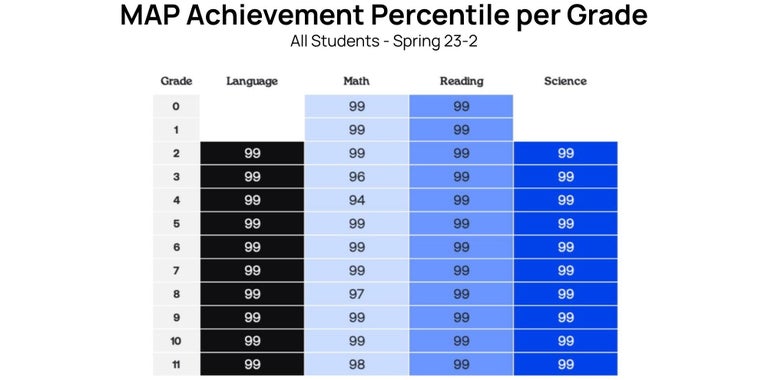

It's tools like these that consistently push Alpha students into the 99th percentile nationally, with only 2 hours of core academics a day.

So yes - ban the wrong kind of AI. But then follow it up with the right kind.

2. Teaching AI Literacy

Bans without explanation don’t eliminate bad AI use. They just push it underground.

That’s why AI literacy matters.

Students need explicit instruction on how AI works, where it helps, where it harms, and how to use it responsibly and critically. Ethics, transparency, security, and judgment aren’t optional add-ons - they’re core skills in the 21st-century curriculum.

In practice, that might look like guided AI-assisted research sessions, structured prompt-writing exercises, or explicit reflection on where AI helped - and where it failed. Whatever the mechanism, students need to learn when AI is appropriate, how to use it as a thinking partner rather than a replacement, and how to document their use transparently.

The goal isn’t blind trust or blanket fear. It’s building informed judgment through repeated, correct use.

3. Rethinking Assessments

AI is an answer engine. So, if high schools want to make ChatGPT cheating irrelevant, they need to redesign assessments so that thinking matters more than a one-line solution.

With AI, proof of process matters a lot more than the final result.

In practice, that means assessing things like:

- Personal progress throughout the task

- Assumptions made and information discarded

- Decisions and reasoning at each stage

- Choices on where AI is and isn't used

- Successes, failures and adjustments

When process is visible, shortcuts lose their value, and genuine, deep thinking becomes the thing that counts.

Traditional, output-centric assessments were built for a world that no longer exists. It's time to put them to bed.

4. Creating Clear Policies

AI only works in schools when everyone - students, teachers and parents - understands the rules of the game.

Clear policies are the foundation on which everything is built. Without them, confusion reigns and misuse thrives.

At the most basic level, schools need to be able to:

- Define when AI assistance is permitted

- Specify how AI tools may be used

- Clarify what counts as cheating versus ethical support

- Require transparent disclosure of AI use

This is where education shifts from prohibition to guidance.

ChatGPT Cheating: Tough Reality vs. Rosy Possibility

There’s no denying it: AI has introduced an entirely new way to cheat, and schools need to take that reality seriously.

But that doesn’t make AI the villain of the classroom.

For the first time - possibly ever - schools have access to tools that can deliver on education’s original promise: developing deep thinkers, adaptable learners, and capable young adults prepared for the real world.

Used well, AI can personalize learning, accelerate growth, and free teachers (or guides) to focus on the human support traditional classrooms have squeezed out.

Students will be leaning on AI for the rest of their lives, and schools don’t get to opt out of that reality. And it's their responsibility to make sure learners are equipped to use these tools well, not blindly.

So, is ChatGPT cheating real? Absolutely. Does that mean AI is the end of education? Not even close.

Ready to join the future of education? Our partners are zeroed in on AI education, and they're on the hunt for elite thinkers ready to join that mission. Check out what they're looking for, and answer the call.