Contents

- Challenge #1: Unreliable AI Detectors

- Challenge #2: Model-Detector Cat-and-Mouse

- Challenge #3: The Unpayable Human Tax

- Challenge #4: Flawed Assessment Design

- The Solution: Smarter Assessments, Not Smarter Detection

- Stop Chasing AI Cheats - Make Them Irrelevant

Think catching an AI cheat is about getting better detection tools? Turns out, there is no such thing as reliable detection in the AI age. In this article, we'll expose the four challenges - from unreliable technology to the impossible burden of judgment - making AI cheating virtually undetectable. Plus, how one strategic reframing could render the detection game obsolete...

They say you can catch an AI cheat if you just have the right tools. But those tools don't exist.

We’ve taught generations of teachers to spot cheaters in the traditional classroom. But now they've found themselves in an AI showdown equipped with underpowered tools and untrained eyes.

The whole idea of 'detection' collapses under a discerning eye. The tech fails to live up to its glossy marketing. The pace of AI races ahead of every safeguard. The human cost pushes teachers past breaking point. And classic assessments are relics from another century.

AI in education is a new world. And you can't solve tomorrow's challenges with yesterday's methods.

Want to understand why spotting an AI cheat is harder than you think? We're here to spill the tea on the four challenges that make detection nearly impossible. And share one strategic adjustment that makes the WHOLE AI detection game obsolete.

Challenge #1: Unreliable AI Detectors

AI detection tools aren't immune to bias.

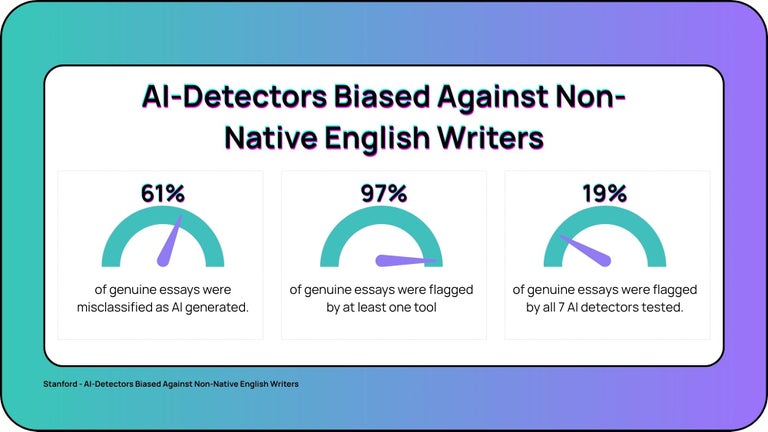

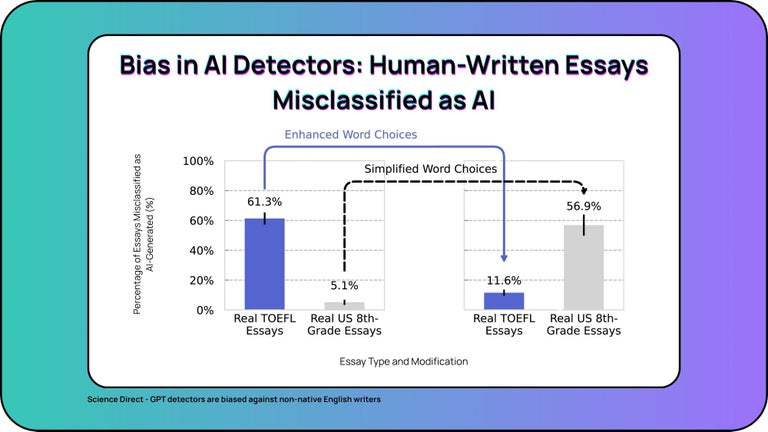

A recent Stanford study testing authentic essays written by non-native English students found that the majority were wrongly flagged as machine-generated.

Over 61% of genuine essays were misclassified, nearly all were flagged by at least one tool, and around one in five were unanimously judged 'Artificial Intelligence' by every detector. These were real students writing their own work. Their only *ahem* 'crime' was writing in English as a second language.

The reason detectors get AI so wrong is structural.

Most AI detectors have been built to equate linguistic complexity with being 'human.' That means simpler phrasing, plainer vocabulary, or different grammatical rhythms - the very patterns common in non-native writing - are frequently flagged as machine output.
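To make that concrete, here's a deliberately crude sketch. It is not how any real detector is built: the `complexity_score` function, the `4.0` threshold, and both sample sentences are invented purely for illustration. All it shows is why a system that treats complexity as a stand-in for 'human' ends up flagging plain, honest writing.

```python
def complexity_score(text: str) -> float:
    """Toy signal (an assumption, not a real detector): average word length
    multiplied by vocabulary variety (unique words / total words)."""
    words = [w.strip(".,;:!?'\"").lower() for w in text.split()]
    words = [w for w in words if w]
    if not words:
        return 0.0
    avg_len = sum(len(w) for w in words) / len(words)
    variety = len(set(words)) / len(words)
    return avg_len * variety


def toy_detector(text: str, threshold: float = 4.0) -> str:
    """Flags anything below the (arbitrary) complexity threshold as AI."""
    if complexity_score(text) < threshold:
        return "flagged as AI"
    return "judged human"


# Two human-written sentences describing the same experience.
plain = "I like my school. My school is big. I go to my school every day."
ornate = ("Although the cavernous schoolhouse initially overwhelmed me, "
          "its labyrinthine corridors gradually became familiar.")

print(toy_detector(plain))   # the plain sentence falls below the threshold
print(toy_detector(ornate))  # the ornate sentence clears it
```

Both sentences are human. Only the plain one gets flagged, and nothing in the score has anything to do with who actually wrote it.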

For all intents and purposes, students are punished for how they use language, not for misconduct.

And the neurodivergent among us face a similar hazard.

Emerging evidence shows neurodivergent learners are more likely to be misclassified because their writing styles often fall outside conventional patterns, putting already-marginalized students directly in the crosshairs.

Let's follow the logic through.

If a system predictably misclassifies students, the ethical response is to audit the decision. But most AI detectors work as black boxes. They don't explain their reasoning and provide little to no insight beyond a final score.

The result? A compromise NO good teacher would ever make.

Because they can't flag a cheater if they can't trust the detector's decision.

Challenge #2: Model-Detector Cat-and-Mouse

Models and detectors are locked in an unwinnable game of cat-and-mouse.

A new language model takes a leap forward. Detectors adjust. Repeat.

Here's the issue: NO ONE can predict the next leap forward in AI.

That puts detectors at a perpetual disadvantage. Cursed to chase a moving shadow - only able to react after the fact.

And the detector disadvantage has become painfully obvious.

In one recent study, researchers asked ChatGPT 3.5 - a model that doesn’t even hold a candle to today’s cutting-edge systems - to paraphrase AI-generated text. That simple ask was enough to cause false negatives to spike across every major detector tested.

That's yesterday’s model walking straight past today's gates...

Stretch the logic. If slight paraphrasing with an older model is enough to expose the cracks, what does that say about the new models producing even more sophisticated, human-like text?

At some point, guided AI writing will become indistinguishable from human output. And when that happens, the chances of any detector catching it collapse from slim to exactly zero.

That’s the rub: if detectors can’t reliably flag what’s in front of them, then AI cheaters WILL slip through unseen.

Challenge #3: The Unpayable Human Tax

When detectors fail, teachers are forced back onto their own judgment. But human judgment isn’t a clean substitute.

Spotting AI content requires specialized training that many educators simply haven’t had. In fact, as of 2025, it's estimated that nearly HALF of educators have yet to receive AI training.

And that lack of preparation collides with a second reality: time.

Fair judgment requires evidence - collecting drafts, holding one-on-one conversations, checking earlier work. It’s the classic burden of proof.

But proof is a slow, heavy process that assumes space teachers just don’t have.

Full-time teachers already report working over 52 hours a week, while leaders are closer to 57 hours. After all that unpaid overtime, 41% of teachers describe their workload as unmanageable, and another 37% say it’s only just manageable.

So what happens? Suspicion lingers, but proof is out of reach.

Honest students are left under a cloud of teacher doubt, while teachers are pushed toward snap judgments they don’t feel confident in. Trust erodes on both sides.

The result is unreasonable for educators and unfair for students. A reality none deserve.

Challenge #4: Flawed Assessment Design

Traditional assessments were never designed with AI in mind. And that blind spot makes them dangerously easy to game.

Most academic tasks still weigh the final product above everything else. An essay summarizing information. A report weaving together broad, predictable themes.

Exactly the kind of output that generative AI specializes in.

By putting the bulk of weight on the polished output, assessments give teachers almost no visibility into how the work was created. And that blind spot is wide open for exploitation.

A student can generate a draft with AI, make light edits, and hand it in. To the teacher, it looks like legitimate effort. But the process - the thinking skills, the struggling, the refining - is invisible.

And this is where it ties back to our earlier point. Just as ChatGPT 3.5 paraphrased its way past every major detector, AI-generated drafts can pass a 'sniff test' without ever exposing the fact that no human process sits underneath.

When the system rewards outputs while ignoring process, it kills the paper trail that proves effort.

The Solution: Smarter Assessments, Not Smarter Detection

As promised, here’s that one strategic reframing that renders the detection game obsolete.

The answer isn’t better detection. It’s better design.

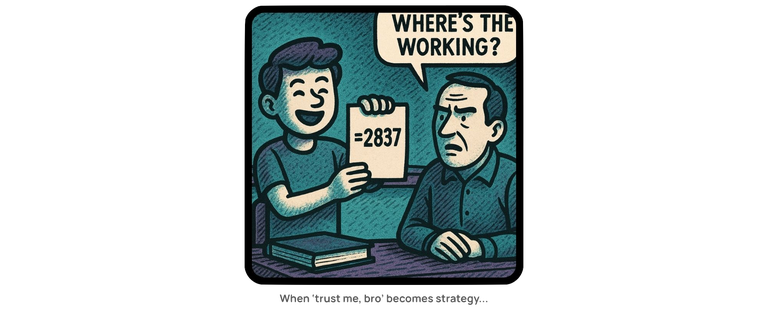

Think of the calculator. Even before it arrived, math students were expected to show their steps. The arrival of the calculator made those steps that much more important. The final answer still mattered, but it mattered much less than proof of process.

That’s exactly where ALL education needs to move today. If AI can accelerate the work, the safeguard has to be the working out.

You need to teach your students to map and track their process.

What assumptions are they starting with? What role might AI play? Which prompts shaped their direction, and what debates played out along the way?

They also need to get radically honest about their missteps - the rejected drafts, the assumptions that didn’t hold up, the hallucinated facts they caught and decided to drop. They're all important parts of the process.
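If it helps to picture what that record might look like, here's a minimal sketch. The `ProcessLog` name and every field in it are hypothetical, loosely based on the questions above. It's one possible shape for a process log, not a prescribed template.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessLog:
    """Hypothetical record a student hands in alongside the final piece."""
    starting_assumptions: list[str] = field(default_factory=list)
    ai_role: str = ""                                         # what AI was used for
    key_prompts: list[str] = field(default_factory=list)      # prompts that shaped direction
    rejected_drafts: list[str] = field(default_factory=list)  # what was thrown out, and why
    dropped_claims: list[str] = field(default_factory=list)   # e.g. hallucinated facts caught

    def missing_sections(self) -> list[str]:
        """Names of the sections the student has left empty."""
        return [name for name, value in vars(self).items() if not value]


# Illustrative entry: the student logged the start of the process but no missteps yet.
log = ProcessLog(
    starting_assumptions=["Detectors are accurate for most students"],
    ai_role="Brainstorming counterarguments",
    key_prompts=["What evidence would challenge my thesis?"],
)
print(log.missing_sections())
```

An empty section isn't proof of anything. It's simply a prompt for the next conversation with that student.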

The real evidence of learning isn’t in the polish at the end. It’s in the reasoning behind what was accepted and why.

That’s what proves ownership.

You'll need to adjust your rubrics, too. Because you can't hold the gloss above all else.

So start moving the weight to judgment, verification, and intellectual honesty. Just like with math, the polished final product can still matter - but it can’t be the biggest piece of the puzzle.
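Here's a rough sketch of what that shift in weight could look like in numbers. The weights, criterion names, and sample marks are all assumptions made up for illustration, so tune them to your own subject and year level.

```python
# Hypothetical rubric weights in percent (invented for illustration).
# The final product still counts, but it is not the biggest piece.
RUBRIC_WEIGHTS = {
    "judgment": 30,
    "verification": 25,
    "intellectual_honesty": 25,
    "final_product": 20,
}


def rubric_score(marks: dict[str, int]) -> float:
    """Weighted total out of 100, given a 0-100 mark for each criterion."""
    return sum(RUBRIC_WEIGHTS[c] * marks[c] for c in RUBRIC_WEIGHTS) / 100


# A glossy submission with almost no visible process behind it.
polished_no_process = {
    "judgment": 20, "verification": 10,
    "intellectual_honesty": 10, "final_product": 95,
}
# A rougher submission with a well-documented process.
rough_with_process = {
    "judgment": 85, "verification": 80,
    "intellectual_honesty": 90, "final_product": 60,
}

print(rubric_score(polished_no_process))  # 30.0
print(rubric_score(rough_with_process))   # 80.0
```

Under these made-up weights, polish alone tops out at 20 points. The rest has to be earned through the working out.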

This reframing will change your classroom.

You'll stop wasting time on detective work because the proof will already be built into the submission. And you'll protect your honest students by making their effort transparent.

Most importantly, you'll be teaching the kind of AI-enabled thinking skills your students will carry with them into the real world. Planning, problem solving, discarding, refining. Not outsourcing thought to a machine, but using it as a genuine thinking partner that helps them solve problems.

Teach the future-proof skill of mastering AI.

Stop Chasing AI Cheats - Make Them Irrelevant

AI cheat detection doesn't work because it can't work.

The tools are biased against the students most vulnerable to false accusations. Detectors are playing a game of cat-and-mouse blindfolded. Teachers don't have the time or training to fill the gap. And traditional assessments were designed for a world without AI.

But the real issue isn't that detection fails. It's that we're starting with entirely the wrong question.

We need to stop asking 'how do we catch AI cheats?', and start asking 'how can we make cheating irrelevant?'

And that comes down to smarter assessments.

When assessments reward visible thinking skills - drafts, reasoning, AI critiques, synthesis over polish - the incentive to hide AI use collapses. AI becomes a thinking partner, and mental outsourcing becomes the obviously weaker move.

So stop chasing AI cheats. And start building smarter assessments that work the right muscles. Because training your students to use AI as a genuine thinking partner is a future-proof skill that will stick for life.